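Recap

The previous section introduced Langevin dynamics, a special diffusion process that aims to generate samples from a distribution $p(\mathbf{x})$. It is defined as:

$$d\mathbf{x}_t = \mathbf{s}(\mathbf{x}_t)\,dt + \sqrt{2}\,d\mathbf{W}_t,$$

or equivalently

$$\mathbf{x}_{t+dt} = \mathbf{x}_t + \mathbf{s}(\mathbf{x}_t)\,dt + \sqrt{2}\,d\mathbf{W}_t,$$

where $d\mathbf{W}_t$ can be roughly treated as $\sqrt{dt}\,\boldsymbol{\epsilon}$, with $\boldsymbol{\epsilon}$ a standard Gaussian random variable, and $\mathbf{s}(\mathbf{x}) = \nabla_{\mathbf{x}} \log p(\mathbf{x})$ is the score function. The Langevin dynamics for $p(\mathbf{x})$ acts as an identity operation on the distribution, transforming samples from $p(\mathbf{x})$ into new samples from the same distribution.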
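The identity property can be checked numerically. The following is a minimal sketch, assuming the Langevin form $d\mathbf{x} = \mathbf{s}(\mathbf{x})\,dt + \sqrt{2}\,d\mathbf{W}$ and a hypothetical 1-D Gaussian target whose score is known in closed form; the names `langevin_step`, `mu`, and `sigma` are illustrative and not taken from the original post.

```python
import numpy as np

def langevin_step(x, score, dt, rng):
    """One Euler-Maruyama step of dx = s(x) dt + sqrt(2) dW."""
    noise = rng.standard_normal(x.shape)  # dW is approximated by sqrt(dt) * noise
    return x + score(x) * dt + np.sqrt(2.0 * dt) * noise

# Hypothetical target p = N(mu, sigma^2); its score is -(x - mu) / sigma^2.
mu, sigma = 2.0, 0.5
score = lambda x: -(x - mu) / sigma**2

rng = np.random.default_rng(0)
x = mu + sigma * rng.standard_normal(50_000)  # samples already drawn from p

for _ in range(200):
    x = langevin_step(x, score, dt=1e-3, rng=rng)

langevin_mean, langevin_std = x.mean(), x.std()
print(langevin_mean, langevin_std)  # both should stay close to mu and sigma
```

Individual samples move at every step, yet the empirical mean and standard deviation remain close to those of the target, up to discretization and sampling error.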

In this section, we present the key processes of Denoising Diffusion Probabilistic Models (DDPMs):

- Forward Diffusion Process: How DDPMs gradually corrupt an image into pure Gaussian noise

- Backward Diffusion Process: How DDPMs generate images by gradually denoising pure Gaussian noise

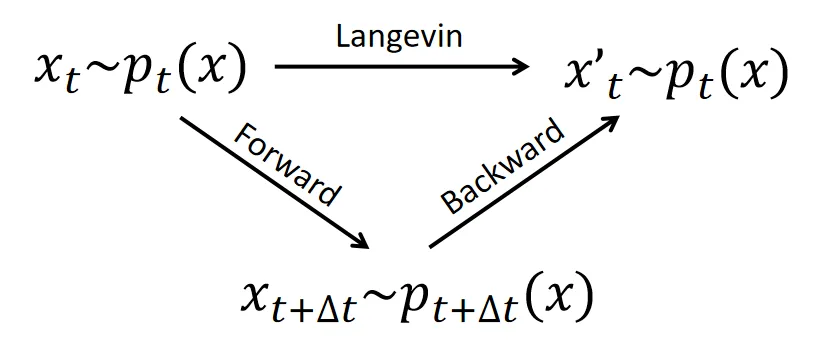

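We will show how to derive the backward diffusion process from the forward process with the help of the triangle relation between the forward process, the backward process, and Langevin dynamics: composing a forward step with a backward step yields a Langevin dynamics step.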

Prerequisites: Calculus, SDE and Langevin Dynamics.

Splitting the Identity: Forward and Backward Processes in DDPM

Denoising Diffusion Probabilistic Models (DDPMs) [1] are models that generate high-quality images from noise via a sequence of denoising steps. Denoting images as a random variable $\mathbf{x}$ with probability density $p(\mathbf{x})$, the DDPM aims to learn a model distribution that mimics the image distribution $p(\mathbf{x})$ and to draw samples from it. The training and sampling of the DDPM use two diffusion processes: the forward and the backward diffusion process.

The Forward Diffusion Process

The forward diffusion process in DDPM generates the necessary training data: clean images and their progressively noised counterparts. It gradually adds noise to existing images using the Ornstein-Uhlenbeck diffusion process (OU process) [2] within a finite time interval $t \in [0, T]$. The OU process is defined by the stochastic differential equation (SDE):

$$d\mathbf{x}_t = -\tfrac{1}{2}\mathbf{x}_t\,dt + d\mathbf{W}_t,$$

in which $t$ is the forward time of the diffusion process, $\mathbf{x}_t$ is the noise-contaminated image at time $t$, and $\mathbf{W}_t$ is a Brownian motion.

Note that $-\mathbf{x}_t$ is just the score function of the standard Gaussian distribution $\mathcal{N}(\mathbf{0}, I)$. Thus, up to a rescaling of time by a factor of 2, the forward diffusion process corresponds to the Langevin dynamics of the standard Gaussian $\mathcal{N}(\mathbf{0}, I)$.

The forward diffusion process has $\mathcal{N}(\mathbf{0}, I)$ as its stationary distribution. This means that, for any initial distribution $p_0(\mathbf{x})$ of positions $\mathbf{x}_0$, the density $p_t(\mathbf{x})$ of $\mathbf{x}_t$ converges to $\mathcal{N}(\mathbf{x} \mid \mathbf{0}, I)$ as $t \to \infty$. When these positions represent vectors of clean images, the process describes a gradual noising operation that transforms clean images into Gaussian noise.
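The convergence to the standard Gaussian can be illustrated with a short simulation. This is a sketch under stated assumptions: it takes the OU form $d\mathbf{x} = -\tfrac{1}{2}\mathbf{x}\,dt + d\mathbf{W}$, uses a hypothetical 1-D "data" distribution in place of images, and compares against the standard closed-form OU marginal $\mathbf{x}_t = e^{-t/2}\mathbf{x}_0 + \sqrt{1 - e^{-t}}\,\boldsymbol{\epsilon}$. The helper name `forward_step` is illustrative.

```python
import numpy as np

def forward_step(x, dt, rng):
    """One Euler-Maruyama step of the OU process dx = -x/2 dt + dW."""
    return x - 0.5 * x * dt + np.sqrt(dt) * rng.standard_normal(x.shape)

rng = np.random.default_rng(0)
# Hypothetical "data": a narrow 1-D distribution far from the standard Gaussian.
data_mean, data_std = 3.0, 0.2
x = data_mean + data_std * rng.standard_normal(50_000)

T, n_steps = 8.0, 800
dt = T / n_steps
for _ in range(n_steps):
    x = forward_step(x, dt, rng)

forward_mean, forward_std = x.mean(), x.std()

# Closed-form moments of the OU marginal at time T, for comparison.
exact_mean = np.exp(-T / 2) * data_mean
exact_std = np.sqrt(np.exp(-T) * data_std**2 + 1.0 - np.exp(-T))
print(forward_mean, forward_std, exact_mean, exact_std)
```

With $T = 8$ the simulated samples are already nearly indistinguishable from $\mathcal{N}(0, 1)$ in their first two moments, which is why a finite $T$ suffices in practice.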

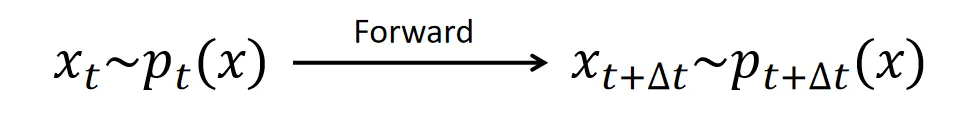

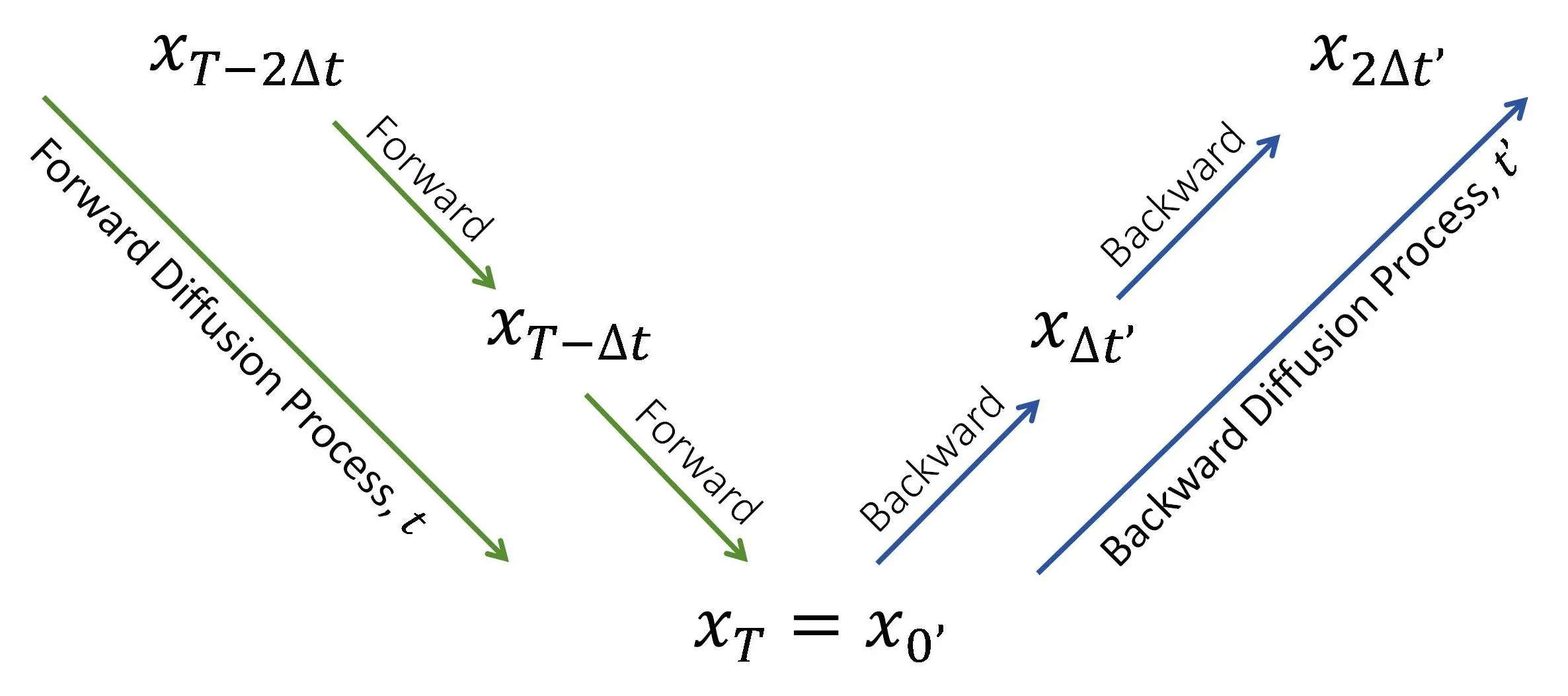

One forward diffusion step with a step size of $\Delta t$ is displayed in the following picture.

The Backward Diffusion Process

The backward diffusion process is the conjugate of the forward process. While the forward process evolves the data distribution $p_0$ toward $\mathcal{N}(\mathbf{0}, I)$, the backward process reverses this evolution, restoring $\mathcal{N}(\mathbf{0}, I)$ to $p_0$.

To derive it, we employ Langevin dynamics as a stepping stone, which provides a straightforward way to obtain the backward diffusion process:

NOTE: Langevin dynamics functions as an “identity” operation with respect to a distribution. Given that the backward process is the reverse of the forward process, the composition of the forward and backward process at time $t$ must therefore reproduce the Langevin dynamics for $p_t(\mathbf{x})$, as shown in the following picture.

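To formalize this, consider the Langevin dynamics for $p_t(\mathbf{x})$, the density of $\mathbf{x}_t$ in the forward process, with a distinct time variable $\tau$, distinguished from the forward diffusion time $t$. This dynamics can be decomposed into forward and backward components as follows:

$$d\mathbf{x}_\tau = \mathbf{s}(\mathbf{x}_\tau, t)\,d\tau + \sqrt{2}\,d\mathbf{W}_\tau = \underbrace{-\tfrac{1}{2}\mathbf{x}_\tau\,d\tau + d\mathbf{W}^{(1)}_\tau}_{\text{Forward}} + \underbrace{\left(\tfrac{1}{2}\mathbf{x}_\tau + \mathbf{s}(\mathbf{x}_\tau, t)\right)d\tau + d\mathbf{W}^{(2)}_\tau}_{\text{Backward}},$$

where $\mathbf{s}(\mathbf{x}, t) = \nabla_{\mathbf{x}} \log p_t(\mathbf{x})$ is the score function of $p_t(\mathbf{x})$. We have used the property that, for two independent Brownian motions $\mathbf{W}^{(1)}$ and $\mathbf{W}^{(2)}$, $d\mathbf{W}^{(1)}_\tau + d\mathbf{W}^{(2)}_\tau = \sqrt{2}\,d\mathbf{W}_\tau$ in distribution.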

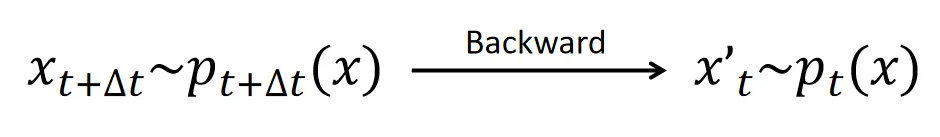

The “Forward” part in this decomposition corresponds to the forward diffusion process, effectively increasing the forward diffusion time $t$ by $d\tau$ and bringing the distribution $p_t$ to $p_{t+d\tau}$. Since the forward and backward components combine to form an “identity” operation, the “Backward” part must reverse the forward process, decreasing the forward diffusion time by $d\tau$ and restoring the distribution back to $p_t$.
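As a quick sanity check of the split, assume the forward part is $-\tfrac{1}{2}\mathbf{x}\,d\tau + d\mathbf{W}^{(1)}_\tau$ and the backward part is $\left(\tfrac{1}{2}\mathbf{x} + \mathbf{s}(\mathbf{x}, t)\right)d\tau + d\mathbf{W}^{(2)}_\tau$, with $\mathbf{W}^{(1)}$ and $\mathbf{W}^{(2)}$ independent. Adding the drifts and the noise covariances separately recovers the Langevin step:

$$
\begin{aligned}
\text{drift:}\quad & -\tfrac{1}{2}\mathbf{x}\,d\tau + \left(\tfrac{1}{2}\mathbf{x} + \mathbf{s}(\mathbf{x}, t)\right)d\tau = \mathbf{s}(\mathbf{x}, t)\,d\tau, \\
\text{noise:}\quad & \operatorname{Cov}\!\left[d\mathbf{W}^{(1)}_\tau + d\mathbf{W}^{(2)}_\tau\right] = d\tau\,I + d\tau\,I = 2\,d\tau\,I = \operatorname{Cov}\!\left[\sqrt{2}\,d\mathbf{W}_\tau\right].
\end{aligned}
$$

The sum of two independent Gaussian increments is again Gaussian, so matching the covariance is enough to match the noise term in distribution.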

Now we can define the backward process according to the backward part in the equation above, with a backward diffusion time $t'$ distinct from the forward diffusion time $t$:

$$d\mathbf{x}_{t'} = \left(\tfrac{1}{2}\mathbf{x}_{t'} + \mathbf{s}(\mathbf{x}_{t'}, t)\right)dt' + d\mathbf{W}_{t'}.$$

One step of this backward diffusion process with step size $dt'$ acts as a reversal of a forward step of the same size.

The backward diffusion process is also a standalone SDE that advances the backward diffusion time $t'$: starting from $\mathbf{x}_{t'}$, one step with step size $dt'$ brings it to $\mathbf{x}_{t'+dt'}$.

These two interpretations determine the relationship between the forward diffusion time $t$ and the backward diffusion time $t'$. Since $dt'$ is both a “decrease” in the forward diffusion time $t$ and an “increase” in the backward diffusion time $t'$, we have

$$dt = -dt',$$

which means the backward diffusion time runs opposite to the forward time. To make $t'$ lie in the same range $[0, T]$ as the forward diffusion time, we define $t' = T - t$. In this notation, the backward diffusion process [3] is

$$d\mathbf{x}_{t'} = \left(\tfrac{1}{2}\mathbf{x}_{t'} + \mathbf{s}(\mathbf{x}_{t'}, T - t')\right)dt' + d\mathbf{W}_{t'},$$

in which $t' \in [0, T]$ is the backward time and $\mathbf{s}(\mathbf{x}, t) = \nabla_{\mathbf{x}} \log p_t(\mathbf{x})$ is the score function of the density of $\mathbf{x}_t$ in the forward process.
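To make the backward SDE concrete, here is a minimal sketch that integrates $d\mathbf{x}_{t'} = \left(\tfrac{1}{2}\mathbf{x}_{t'} + \mathbf{s}(\mathbf{x}_{t'}, T - t')\right)dt' + d\mathbf{W}_{t'}$ with Euler-Maruyama steps. It assumes a hypothetical 1-D Gaussian data distribution so that the score of every forward marginal is available analytically; a real DDPM would replace `score` with a learned network. All names (`mu0`, `sigma0`, `score`, `backward_step`) are illustrative.

```python
import numpy as np

# Hypothetical data distribution p_0 = N(mu0, sigma0^2), chosen so the score is analytic.
mu0, sigma0 = 3.0, 0.2
T, n_steps = 8.0, 2000
dt = T / n_steps

def score(x, t):
    """Score of the forward marginal p_t = N(mu0 e^{-t/2}, sigma0^2 e^{-t} + 1 - e^{-t})."""
    mean = mu0 * np.exp(-t / 2)
    var = sigma0**2 * np.exp(-t) + 1.0 - np.exp(-t)
    return -(x - mean) / var

def backward_step(x, t_prime, dt, rng):
    """One Euler-Maruyama step of dx = (x/2 + s(x, T - t')) dt' + dW."""
    drift = 0.5 * x + score(x, T - t_prime)
    return x + drift * dt + np.sqrt(dt) * rng.standard_normal(x.shape)

rng = np.random.default_rng(0)
x = rng.standard_normal(20_000)  # start from pure Gaussian noise at t' = 0
for k in range(n_steps):
    x = backward_step(x, k * dt, dt, rng)

backward_mean, backward_std = x.mean(), x.std()
print(backward_mean, backward_std)  # should approach mu0 and sigma0
```

Starting from standard Gaussian noise, the samples end up with mean and standard deviation close to those of the assumed data distribution, up to discretization error and the small mismatch between $p_T$ and $\mathcal{N}(0, 1)$ at finite $T$.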

Forward-Backward Duality

We have previously shown that a backward step is the reverse of a forward step: advancing time by $dt'$ in the backward process corresponds to receding time by the same amount in the forward process. What then occurs when we chain together a series of forward and backward steps? Consider the following process: start with $\mathbf{x}_0 \sim p_0$, evolve it via the forward process to $\mathbf{x}_T$, then take $\mathbf{x}_T$ as the initial position of the backward process at $t' = 0$ and evolve it to $t' = T$. This sequence is illustrated in the figure below.

The green arrows represent consecutive forward process steps that advance the forward diffusion time $t$, while the blue arrows indicate consecutive backward process steps that advance the backward diffusion time $t'$.

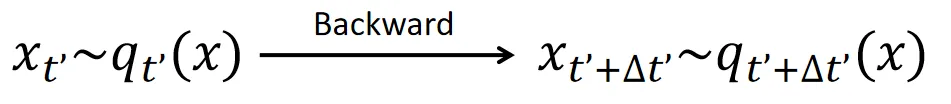

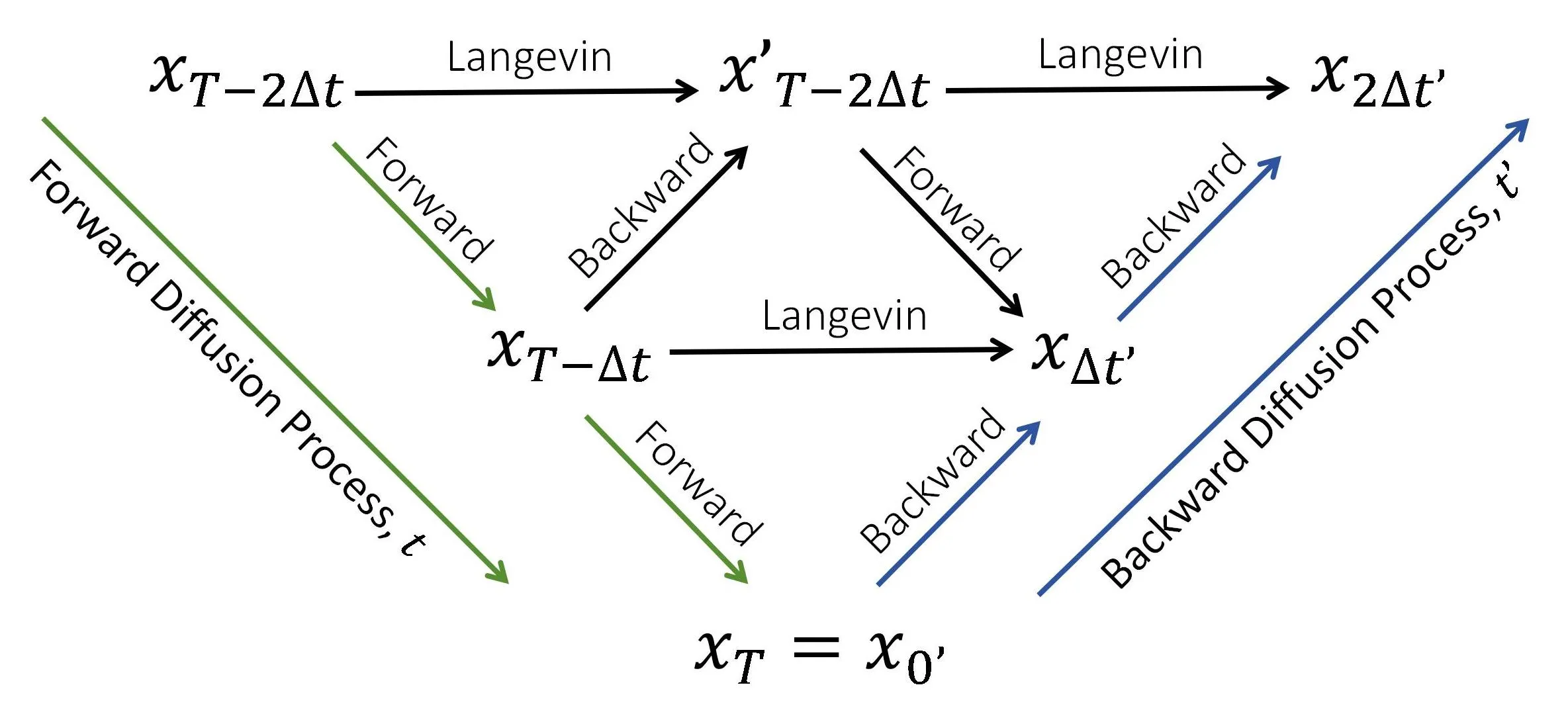

We examine the relationship between $\mathbf{x}_t$ in the forward diffusion process and $\mathbf{x}_{t'}$ in the backward diffusion process. The composition of a forward and a backward step constitutes a Langevin dynamics step. This allows us to connect the samples $\mathbf{x}_t$ in the forward process with the samples $\mathbf{x}_{t'}$ in the backward process through Langevin dynamics steps, as illustrated below:

Each horizontal row in this picture corresponds to consecutive steps of Langevin dynamics, which alter the samples while maintaining the same probability density. This illustrates the duality between the forward and backward diffusion processes: while $\mathbf{x}_t$ (forward) and $\mathbf{x}_{t'}$ (backward) with $t' = T - t$ are distinct samples, they obey the same probability distribution.

TIP: It’s important to note that the backward diffusion process does not generate identical samples to the forward process; rather, it produces samples according to the same probability distribution, due to the identity property of Langevin dynamics.

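To formalize the duality, we define the density of $\mathbf{x}_t$ (forward) as $p_t(\mathbf{x})$ and the density of $\mathbf{x}_{t'}$ (backward) as $q_{t'}(\mathbf{x})$. If we initialize

$$q_0(\mathbf{x}) = p_T(\mathbf{x}),$$

then their evolutions are related by

$$q_{t'}(\mathbf{x}) = p_{T - t'}(\mathbf{x}).$$

For large $T$, $p_T(\mathbf{x})$ converges to $\mathcal{N}(\mathbf{x} \mid \mathbf{0}, I)$. Thus, the backward process starts at $t' = 0$ with $q_0(\mathbf{x}) = \mathcal{N}(\mathbf{x} \mid \mathbf{0}, I)$ and, after evolving to $t' = T$, generates samples from the data distribution:

$$q_T(\mathbf{x}) = p_0(\mathbf{x}).$$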

This establishes an exact correspondence between the forward diffusion process and the backward diffusion process, indicating that the backward diffusion process can generate image data from pure Gaussian noise.
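The duality can also be checked at intermediate times, not only at the endpoint. The sketch below assumes the same OU forward process and a hypothetical 1-D Gaussian data distribution, for which the forward marginals have closed-form mean and variance. It runs the backward SDE from noise and compares the empirical moments at a few backward times $t'$ with the forward moments at $T - t'$. The names `forward_moments` and `checkpoints` are illustrative.

```python
import numpy as np

# Hypothetical Gaussian data p_0 = N(mu0, sigma0^2); T is large enough that p_T is close to N(0, 1).
mu0, sigma0, T, n_steps = 1.0, 0.5, 6.0, 1200
dt = T / n_steps

def forward_moments(t):
    """Mean and variance of p_t for Gaussian data under dx = -x/2 dt + dW."""
    return mu0 * np.exp(-t / 2), sigma0**2 * np.exp(-t) + 1.0 - np.exp(-t)

rng = np.random.default_rng(0)
x = rng.standard_normal(20_000)  # q_0 = N(0, 1), approximating p_T
checkpoints = {}                 # backward time t' -> (sample mean, sample variance)
for k in range(n_steps):
    t = T - k * dt               # forward time matching backward time t' = k * dt
    m, v = forward_moments(t)
    drift = 0.5 * x - (x - m) / v  # x/2 + s(x, T - t') with the analytic Gaussian score
    x = x + drift * dt + np.sqrt(dt) * rng.standard_normal(x.shape)
    if (k + 1) % (n_steps // 3) == 0:
        checkpoints[(k + 1) * dt] = (x.mean(), x.var())

for t_prime, (m_hat, v_hat) in checkpoints.items():
    m, v = forward_moments(T - t_prime)
    print(f"t'={t_prime:.1f}: backward ({m_hat:.3f}, {v_hat:.3f}) vs forward ({m:.3f}, {v:.3f})")
```

The backward moments track the forward ones at the mirrored times, which is the statement $q_{t'} = p_{T - t'}$ restricted to the first two moments of this Gaussian example.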

What is Next

We demonstrated that backward diffusion, the dual of the forward process, can generate image data from noise. However, this requires access to the score function $\mathbf{s}(\mathbf{x}, t)$ at every timestep $t$. In practice, we approximate this function using a neural network. In the next section, we will explain how to train such score networks.

Stay tuned for the next installment!

Discussion

If you have questions, suggestions, or ideas to share, please visit the discussion post.

Cite this blog

This blog is a reformulation of the appendix of the following paper.

@misc{zheng2025lanpainttrainingfreediffusioninpainting,
  title={LanPaint: Training-Free Diffusion Inpainting with Asymptotically Exact and Fast Conditional Sampling},
  author={Candi Zheng and Yuan Lan and Yang Wang},
  year={2025},
  eprint={2502.03491},
  archivePrefix={arXiv},
  primaryClass={eess.IV},
  url={https://arxiv.org/abs/2502.03491},
}

Footnotes

1. Ho, J., Jain, A., & Abbeel, P. (2020). Denoising diffusion probabilistic models. Advances in Neural Information Processing Systems, 33, 6840–6851.

2. Uhlenbeck, G. E., & Ornstein, L. S. (1930). On the theory of the Brownian motion. Physical Review, 36(5), 823–841.

3. Anderson, B. D. O. (1982). Reverse-time diffusion equation models. Stochastic Processes and their Applications, 12(3), 313–326.